AWS, Azure, Google Cloud, DigitalOcean… The cloud is used every day and offers (almost) unlimited capacity… as long as we pay the bill. And sometimes the bill holds some surprises. Cloud providers make it easy to configure instances and databases and to store data, but it can get very costly if you don’t keep an eye on it.

In this article we’ll give you some tips and good practices that can help you save money.

To start, here are some general rules you should follow:

General rules:

- Configure a main account dedicated to billing, then configure subaccounts/projects whose invoices are linked to that account.

- In each account/project, configure a budget and an alert that fires if consumption exceeds a certain amount (to be defined based on your usual consumption). AWS, GCP and Azure all offer this. This way you’ll be warned during the month, and not at the end when the bill arrives.

- If your account has several projects/environments, use labels/tags to be able to identify each resource.

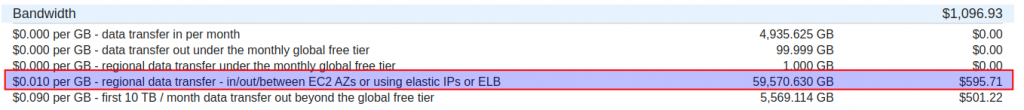

- Export your billing data so that you can query it (Athena / BigQuery). This has a cost (not really expensive unless you spend your day querying it) but gives you much more detail in some cases. For instance, in this AWS invoice we can see $595 of data transfer out without any details.

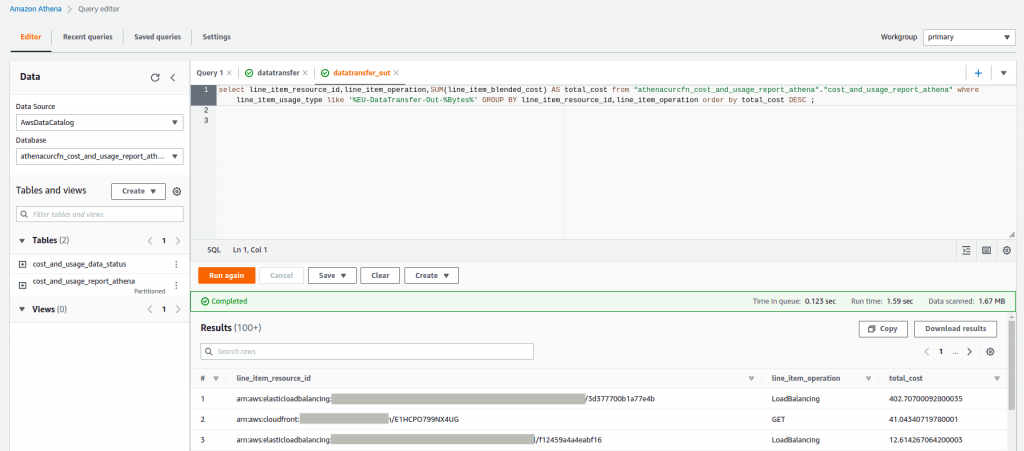

If we query the billing export in Athena, we can see that this is mainly due to a load balancer.

If we want to save money on that resource, we know where to start.
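As a rough sketch of the kind of query involved, the helper below builds an Athena SQL statement that ranks resources by data-transfer-out cost. It assumes the export is an AWS Cost and Usage Report with resource IDs enabled and the usual `line_item_*` columns and `year`/`month` partitions; the table name `billing.cur` and the function name are hypothetical, so adapt them to your own export.

```python
def data_transfer_by_resource_query(table: str, year: str, month: str,
                                    limit: int = 20) -> str:
    """Build a SQL query ranking resources by data-transfer-out cost.

    Assumption: `table` is a Cost and Usage Report table queried from Athena.
    Only pass trusted values: the query is built by plain string formatting.
    """
    return f"""
SELECT line_item_resource_id,
       line_item_usage_type,
       SUM(line_item_unblended_cost) AS cost
FROM {table}
WHERE year = '{year}' AND month = '{month}'
  AND line_item_usage_type LIKE '%DataTransfer-Out%'
GROUP BY line_item_resource_id, line_item_usage_type
ORDER BY cost DESC
LIMIT {limit}
""".strip()


# Hypothetical table name; paste the printed query into the Athena console.
print(data_transfer_by_resource_query("billing.cur", "2024", "3"))
```

Filtering on the partition columns keeps the amount of scanned data (and so the cost of the query itself) low.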

Finally, review your bills periodically (every 3/6/12 months).
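To illustrate the budget rule above, here is a minimal sketch of the logic behind a mid-month alert. It is not any provider's API: the function names, the thresholds and the simple linear projection are our own assumptions, and the managed budget tools of each cloud do this for you.

```python
import calendar
from datetime import date


def projected_month_end_spend(spend_to_date: float, today: date) -> float:
    """Linear projection: assume the rest of the month costs the same per day."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def budget_alerts(spend_to_date: float, budget: float, today: date,
                  thresholds=(0.5, 0.8, 1.0)) -> list:
    """Return one message per crossed threshold, plus a forecast warning."""
    messages = []
    for threshold in thresholds:
        if spend_to_date >= budget * threshold:
            messages.append(f"actual spend reached {threshold:.0%} of budget")
    if projected_month_end_spend(spend_to_date, today) > budget:
        messages.append("projected month-end spend exceeds budget")
    return messages


# Example with made-up numbers: 400 spent by April 10th on a 1000 budget.
for message in budget_alerts(400.0, 1000.0, date(2024, 4, 10)):
    print(message)
```

The forecast check is what warns you during the month: in the example no threshold has been crossed yet, but the current pace already points above the budget.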

Bill review:

One of the best automatic tools you can use is CloudCheckr. After you configure an account in your project, it lists the instances that are oversized. The downsides are:

- It checks CPU but not RAM. An instance with low CPU usage but full RAM can’t be resized.

- It doesn’t take into account autoscaling instances that go up and down automatically.
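A manual check can cover both gaps. The sketch below flags an instance as a downsizing candidate only when CPU and RAM are both low, and skips autoscaled instances. The `InstanceUsage` type, the 40% limits and the sample figures are hypothetical; you would feed it peak values from your own monitoring (on AWS, memory metrics usually require installing the CloudWatch agent).

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    name: str
    peak_cpu_pct: float   # highest CPU usage seen over the review period
    peak_mem_pct: float   # highest RAM usage seen over the review period
    autoscaled: bool = False


def downsize_candidates(instances, cpu_limit=40.0, mem_limit=40.0):
    """Names of instances whose CPU *and* RAM both stay under the limits."""
    return [
        i.name
        for i in instances
        if not i.autoscaled
        and i.peak_cpu_pct < cpu_limit
        and i.peak_mem_pct < mem_limit
    ]


fleet = [
    InstanceUsage("api-1", peak_cpu_pct=15, peak_mem_pct=30),
    InstanceUsage("cache-1", peak_cpu_pct=10, peak_mem_pct=95),  # RAM is full
    InstanceUsage("worker-asg", peak_cpu_pct=5, peak_mem_pct=10, autoscaled=True),
]
print(downsize_candidates(fleet))
```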

So another way is to check the bill manually. Go to the billing details and review the costs, from the highest to the lowest. Here are some questions you can ask yourself for each service:

S3:

- Is versioning active, and is it needed? Versioning on a backup bucket can be very costly, for instance.

- Are all files regularly accessed? If not, you can implement a lifecycle policy to save money on buckets with lots of data.

- Can files be deleted after xx days? If so, you can also implement a lifecycle policy.
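The three questions above map to one lifecycle rule. Here is a minimal sketch using the dictionary shape that boto3's `put_bucket_lifecycle_configuration` expects; the rule ID, the day counts and the helper names are assumptions to adapt, and `apply_lifecycle` needs boto3 and AWS credentials to actually run.

```python
def build_lifecycle(prefix: str = "", to_infrequent_after_days: int = 30,
                    delete_after_days: int = 365,
                    noncurrent_days: int = 30) -> dict:
    """Build an S3 lifecycle configuration (all day counts are examples)."""
    if delete_after_days <= to_infrequent_after_days:
        raise ValueError("objects must expire after they transition")
    return {
        "Rules": [{
            "ID": "archive-then-expire",  # hypothetical rule name
            "Filter": {"Prefix": prefix},  # "" applies to the whole bucket
            "Status": "Enabled",
            # Rarely accessed files move to a cheaper storage class.
            "Transitions": [{"Days": to_infrequent_after_days,
                             "StorageClass": "STANDARD_IA"}],
            # Files that can be deleted after xx days.
            "Expiration": {"Days": delete_after_days},
            # Old versions in a versioned bucket stop piling up.
            "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
        }]
    }


def apply_lifecycle(bucket: str, config: dict) -> None:
    """Push the configuration to a bucket (replaces its existing rules)."""
    import boto3  # imported here so the builder works without boto3
    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config)


config = build_lifecycle(prefix="backups/", delete_after_days=180)
```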

Instances:

- Check CPU / RAM usage on your instances and resize them if possible.

- Is autoscaling correctly configured? You may not need that many instances always up.

- Could you stop some instances at night / on week ends?
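To get a feel for what stopping instances at night and on weekends is worth, here is a small back-of-the-envelope calculation. The function name and the 12-hour working day are our own assumptions, and it only covers compute hours: storage attached to a stopped instance is normally still billed.

```python
HOURS_PER_WEEK = 24 * 7  # 168


def scheduled_savings(hours_per_weekday: float, weekend_on: bool = False) -> float:
    """Fraction of instance-hours saved compared with running 24/7."""
    on_hours = hours_per_weekday * 5 + (48 if weekend_on else 0)
    return 1 - on_hours / HOURS_PER_WEEK


# Up 12 hours a day from Monday to Friday, stopped the rest of the time.
print(f"{scheduled_savings(12):.0%} fewer instance-hours")
```

A development environment used only during office hours runs 60 hours out of 168, so most of its compute bill can disappear.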

Volumes:

- Check for unattached (unmounted) volumes and delete them if they are no longer needed.
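On AWS, a volume that is not attached to any instance is reported in the `available` state. The sketch below filters volume records shaped like the output of boto3's `describe_volumes`; the helper names and the sample data are hypothetical, and `list_unattached_volumes` needs boto3 and credentials.

```python
def unattached_volumes(volumes: list) -> list:
    """Keep only volumes that are not attached to any instance."""
    return [
        v for v in volumes
        if v.get("State") == "available" and not v.get("Attachments")
    ]


def list_unattached_volumes(region: str) -> list:
    """Fetch unattached EBS volumes in one region."""
    import boto3  # imported here so the filter above works without boto3
    ec2 = boto3.client("ec2", region_name=region)
    found = []
    pages = ec2.get_paginator("describe_volumes").paginate(
        Filters=[{"Name": "status", "Values": ["available"]}])
    for page in pages:
        found.extend(page["Volumes"])
    return found


sample = [
    {"VolumeId": "vol-aaa", "State": "in-use", "Size": 100,
     "Attachments": [{"InstanceId": "i-123"}]},
    {"VolumeId": "vol-bbb", "State": "available", "Size": 500, "Attachments": []},
]
orphans = unattached_volumes(sample)
print([v["VolumeId"] for v in orphans], sum(v["Size"] for v in orphans), "GiB")
```

Review the list before deleting anything, and take a snapshot first if you are not sure the data is useless.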

Databases:

- Check CPU / RAM usage and resize them if possible.

Load balancers:

- Check whether several load balancers could be grouped into a single one.

- Are all load balancers useful? (A load balancer with a single instance, or one that only returns a 200, could be useless.)
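As a starting point for that review, the sketch below flags load balancers with zero or one target behind them. The input format (a mapping from load balancer name to its list of targets) and the names are hypothetical; you would build it from your provider's inventory.

```python
def review_load_balancers(targets_by_lb: dict) -> dict:
    """Return a finding for each load balancer with fewer than two targets."""
    findings = {}
    for name, targets in targets_by_lb.items():
        if len(targets) == 0:
            findings[name] = "no targets: probably unused"
        elif len(targets) == 1:
            findings[name] = "single target: check whether it is needed"
    return findings


inventory = {
    "web-prod": ["i-1", "i-2", "i-3"],
    "legacy-admin": ["i-9"],
    "old-campaign": [],
}
for name, finding in review_load_balancers(inventory).items():
    print(f"{name}: {finding}")
```

A finding is only a hint: a single-target load balancer may still be there for TLS termination or a stable endpoint.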

Those are examples for some services. Obviously each case is different, so if you’re still lost with all of this, feel free to contact us. We can help you configure everything.

Instance reservation

Finally, once the infrastructure is correctly provisioned (instances with the correct sizing, up/down xx hours per day), we can reserve instances. In other words, you commit to keeping instances for a certain time, and possibly to paying upfront. In exchange you get a discount. The longer we commit and the more we pay upfront, the bigger our discount.
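This is why sizing and scheduling come first: a reservation is paid for every hour, whether the instance runs or not. A small sketch of the break-even arithmetic, with made-up hourly rates and hypothetical function names:

```python
def reservation_break_even(on_demand_hourly: float,
                           reserved_effective_hourly: float) -> float:
    """Fraction of the time an instance must run for a reservation to pay off.

    On-demand cost grows with running hours; the reserved cost is due for
    every hour of the commitment, so break-even is the ratio of both rates.
    """
    return reserved_effective_hourly / on_demand_hourly


def worth_reserving(utilization: float, on_demand_hourly: float,
                    reserved_effective_hourly: float) -> bool:
    """True when the instance runs more than the break-even fraction."""
    return utilization > reservation_break_even(
        on_demand_hourly, reserved_effective_hourly)


# Example rates only: 0.10/h on demand, 0.06/h effective when reserved.
print(worth_reserving(1.0, 0.10, 0.06))       # always-on instance
print(worth_reserving(60 / 168, 0.10, 0.06))  # up 12 h on weekdays only
```

With these example rates, an instance has to run about 60% of the time before reserving it is cheaper than paying on demand.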

But we’ll cover that in an upcoming article.

We hope this article has been useful to you. We will keep publishing more related content soon.

At Geko we can advise you on how to maximize cost savings in your cloud system.

If you would like to get to know us better, check out our services. Please do not hesitate to contact us if you require further information.