Geko Cloud’s security team recently visited RootedCON, Spain’s most important security conference, and among other things, there was a lot of talk about one of the weakest links in application development pipelines: people.

One of the most important mantras to remember when creating a cyber risk model for your company is that you’re never going to have a completely secure application ecosystem. However many penetration tests you run and however many code quality checks you add to your pipeline, something always slips through. Sometimes the return on investment of fixing it is not worth the time; sometimes the tests don’t catch the problem because it depends on several errors in the system interacting with each other. Complex systems fail, and that’s something you cannot avoid. You can control what software you use and what code runs, but a slip-up is only a matter of time.

Why do I apply these security guardrails then?

This is why your development and security plan needs to prioritize containment and isolation: if you assume that something is going to happen, prepare your environment so that the incident has as little impact as possible. An attacker got a shell on your web server? They can’t do much as a well-scoped webserver user. Production database server access? Fine, but only to some databases and tables, with no administrator rights. Minimize the impact area and prepare for damage control: those should be the cornerstones of your fight against cyberattacks.

One way to implement this kind of guardrail is to use specialized security tools that protect against common attacks and that, in a simple and unattended way, detect, alert on and stop external attacks, whether they come from bots or from sophisticated threat actors. It’s another layer of protection that may not stop every attack, but it can save you from more than one incident or cut an exploit chain. All of this without your security team having to check every false positive, so they can dedicate their time to higher-quality work.

What part of my environment do I begin with?

In this case, the biggest attack surface we usually need to address is websites. Every company today has a web application that it needs to use, be it an internal tool or a user-facing corporate site. Whichever it is, it can become a pivoting point in an incident. To avoid this in web environments exposed to threat actors, we need appropriate tools that detect common attacks and stop them. In our case, after analyzing the available options for this scenario, we settled on OWASP’s ModSecurity Core Rule Set.

The ModSecurity Core Rule Set is a pack of rules maintained by the Open Web Application Security Project Foundation that keeps a detection ruleset aligned with what this world-renowned organization considers the most dangerous attack vectors against modern web applications. These rules are loaded by the ModSecurity module on your web server (nginx, Apache, IIS), which intercepts and analyzes the requests and responses that reach your site and then decides whether to alert or block based on rule priorities and threat scores.

Should I migrate my current webserver to one with ModSecurity?

Thanks to the modular implementation of ModSecurity, this tool can be applied to existing webservers in a simple way, with little overhead in the site’s architecture. It’s quick to implement in the environment that you already have set up and allows you to immediately start processing alerts and blocking attacks. As it doesn’t modify the core functionality of your webserver, you can reuse most of your current configuration, which makes adopting it in your existing environment a low-friction improvement.

How do I enable this in my pre-existing image?

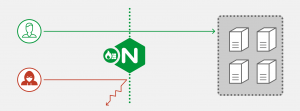

If you don’t have a monolithic webserver set-up, don’t fret, this isn’t a show stopper. Even in a microservice architecture you can still apply the solutions created by the open-source community that actively contributes to the Core Rule Set project. There are drop-in solutions for almost any environment you come from.

Starting up your site with docker-compose? The modsecurity-crs Docker image that’s kept updated in the OWASP Docker repository implements the Core Rule Set set-up on top of the nginx container that you already know and love. Kubernetes fan? You probably already noticed that at Geko we are too, and we recently discovered that the nginx-ingress-controller Helm chart already has ModSecurity and Core Rule Set support built in. You just need to enable a feature flag and add a couple of tags to your ingress objects and, without downtime, you’ll sleep better knowing you just put one more stone in a cybercriminal’s path to your information.

How do these blocks work, exactly?

ModSecurity handles every request through four phases of security controls: request headers, request body, response headers and response body. In the first phase it applies the Core Rule Set rules that look for indicators of compromise in the HTTP request line and headers. Details like using a PUT where only GETs should be accepted, unwanted protocols or SQL injections in the URL are examples of checks implemented in this first step. After this, if the score isn’t high enough to drop the request, it goes on to check the request body for alarms like malicious data in POST requests. If these checks raise no alarms, the request is forwarded to the application for processing.

But that’s only the first half of the communication, and some requests could get through if they’re obfuscated enough, like base64-encoded payloads. To close these potential detection gaps, response data from the application is also checked. Headers with sensitive information, or an abnormally large body that could be an SQL dump or the output of a binary execution, never reach the attacker, as ModSecurity discards the response before it gets back to the threat actor.
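To make the flow above more concrete, here is a minimal sketch in Python of a request going through the inspection steps in order. It is a toy model and not how ModSecurity is implemented: the function names, the `x-debug-token` header, the string checks, the size limit and the threshold of 5 are all assumptions made up for this illustration, and real Core Rule Set rules are far more thorough.

```python
BLOCK_THRESHOLD = 5  # illustrative value for this sketch only


def request_header_score(request):
    """Stand-in for the request header phase: method and URL checks."""
    score = 0
    if request["method"] not in ("GET", "POST"):
        score += 5  # unexpected HTTP method
    if "union select" in request["path"].lower():
        score += 5  # naive SQL injection marker in the URL
    return score


def request_body_score(request):
    """Stand-in for the request body phase: look for a script tag."""
    body = request.get("body", "").lower()
    return 5 if "<script" in body else 0


def response_score(response):
    """Stand-in for the response phases: leaky header or huge body."""
    score = 0
    # "x-debug-token" is a hypothetical example of a sensitive header.
    if any(name.lower() == "x-debug-token" for name in response["headers"]):
        score += 5
    if len(response["body"]) > 1_000_000:
        score += 5  # abnormally large body, could be a data dump
    return score


def handle(request, application):
    """Run the checks in order and stop at the first one that trips."""
    score = request_header_score(request)
    if score >= BLOCK_THRESHOLD:
        return "blocked: request headers"
    score += request_body_score(request)
    if score >= BLOCK_THRESHOLD:
        return "blocked: request body"
    # Only now does the application see the request.
    response = application(request)
    if response_score(response) >= BLOCK_THRESHOLD:
        return "blocked: response"
    return "delivered"


# Usage: a fake application that always answers with a small body.
app = lambda request: {"headers": {}, "body": "hello"}
print(handle({"method": "GET", "path": "/"}, app))
print(handle({"method": "PUT", "path": "/"}, app))
```

The point of the sketch is the ordering: the application is only called once the request checks have passed, and its answer is inspected again before anything is returned to the client.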

How sensitive are these detection rules? Can I tune them?

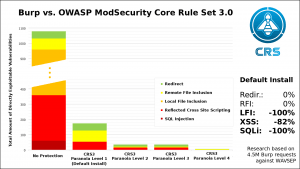

ModSecurity implements two main elements to control the blocking sensitivity and handle false positives: Score and Paranoia Level. The first one is a score assigned to each rule in the Core Rule Set that defines how critical the incident is: the alarm level from getting a PUT on a GET-only endpoint is not the same as detecting a Log4j command injection on a form submit. The score is accumulated across the communication, and if it reaches the limit, ModSecurity alerts about the incident or cuts the connection.
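The accumulation idea can be sketched in a few lines of Python. This is only an illustration: the severity names and point values below mirror what we understand to be the Core Rule Set defaults (critical 5, error 4, warning 3, notice 2, with a threshold of 5 for incoming requests), so treat them as assumptions and check your own configuration. The `decide` function is a hypothetical helper, not part of any ModSecurity API.

```python
# Assumed default points per severity; verify against your CRS setup.
SEVERITY_SCORES = {"critical": 5, "error": 4, "warning": 3, "notice": 2}


def decide(matched_severities, threshold=5):
    """Sum the points of every matched rule and compare with the limit."""
    score = sum(SEVERITY_SCORES[severity] for severity in matched_severities)
    action = "block" if score >= threshold else "allow"
    return score, action


# One low-severity match on its own is tolerated...
print(decide(["notice"]))
# ...but several of them together, or a single critical one, are not.
print(decide(["warning", "notice"]))
print(decide(["critical"]))
```

Scoring this way means a single odd-looking detail rarely blocks a legitimate user, while a request that trips several rules at once is stopped.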

To control how sensitive detection is, the Core Rule Set uses the Paranoia Level, a setting (exposed as an environment variable in the container setup) that ranges from 1 to 4. Level 1 is the lowest and enables only the baseline rules, which rarely produce false positives; each higher level enables additional, stricter rules on top, up to level 4. The score needed to act on a request is a separate setting, the anomaly threshold: with the default threshold of 5 for incoming requests, a single critical match (worth 5 points, the most critical score) is enough to act, while lower-severity matches have to accumulate. The higher the paranoia level, the more the environment detects, but the more false positives we will get.

But we may not always want to block on everything we detect. Sometimes we want to debug without interrupting service. To help with this there’s a special environment variable: the Executing Paranoia Level. It has to be equal to or higher than the Paranoia Level, and the rules that sit between the two levels are still run and logged but don’t add to the score, so they alert while allowing the request to continue. For example, setting the paranoia level to 1 and the executing paranoia level to 4 will alert on every single rule trigger in the environment, but only interrupt communications for events caught by the level 1 rules, so we can debug and tune rules without service interruption.
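The interplay between the two levels is easier to see in code. The sketch below is a simplified model based on our understanding of the Core Rule Set: each rule belongs to a paranoia level from 1 to 4, rules up to the paranoia level add to the blocking score, and rules above it but within the executing paranoia level are only logged. The `Rule` class, the rule names and the `evaluate_with_levels` helper are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str   # hypothetical name, not a real CRS rule id
    paranoia: int  # level (1 to 4) at which the rule becomes active
    score: int     # points added when the rule matches


def evaluate_with_levels(matched, paranoia_level, executing_level, threshold=5):
    """Split matched rules into scoring ones and log-only ones."""
    if not 1 <= paranoia_level <= executing_level <= 4:
        raise ValueError("expected 1 <= paranoia <= executing <= 4")
    score = 0
    log_only = []
    for rule in matched:
        if rule.paranoia <= paranoia_level:
            score += rule.score  # counts towards blocking
        elif rule.paranoia <= executing_level:
            log_only.append(rule.rule_id)  # alert, but let it through
        # Rules above the executing level are not run at all.
    return {"blocked": score >= threshold, "score": score, "log_only": log_only}


strict_match = [Rule("strict-charset-check", paranoia=3, score=5)]

# Debug mode: the strict rule is reported but the request continues.
print(evaluate_with_levels(strict_match, paranoia_level=1, executing_level=4))
# Once tuned, raising the paranoia level makes the same match block.
print(evaluate_with_levels(strict_match, paranoia_level=3, executing_level=3))
```

Running with a low paranoia level and a high executing level for a while gives you a list of what the stricter rules would have blocked, which is the input you need to tune exclusions before enforcing them.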

That sounds like a lot to take in…

Here at Geko we’ve already been fighting with ModSecurity tuning and configuration for a bit. If you want to implement this security suite in your environment but you don’t have the time to handle false positives, let’s have a chat and we’ll take care of making your environment more secure, so that your team can dedicate their time to their tasks without having to worry about a cybercriminal slipping through the cracks of your infrastructure. ModSecurity will catch and stop problems that you don’t even know exist.