Introduction

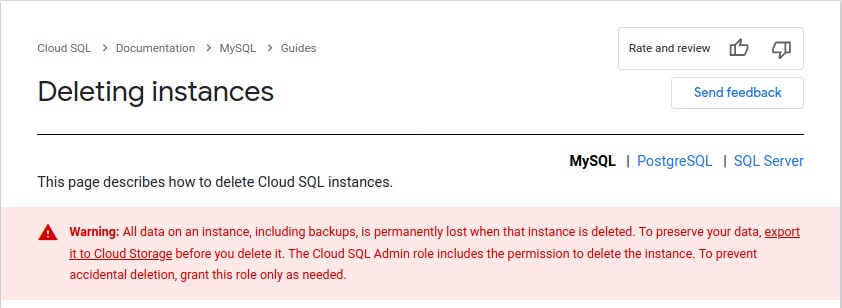

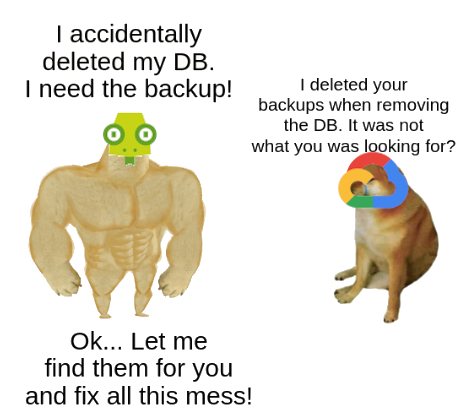

It all started with a simple message: “Hello Geko, we are receiving a DB connection timeout“. It took less than 2 minutes to discover what was going on: The database was deleted. Lots of facepalms after, the main task was to recover the data (and also the service). Fortunately, Google enforces the backup policies to always copy the database once per day. At Geko, we all agree with this policy. So the thing is we went to look for the backups and the nightmare began. There were no backups! There was nothing! And then we found out backups are strongly bounded to the database resource, so if the database gets deleted the backups also do. Google states it so clear on their docs for Cloud SQL.

We are not going to go deeper on this post about how the database got deleted. Just tell it was an automated process which detected a disk size increase and when trying to go back to a previous, smaller size an entire database replacement was required. There was no opportunity to accept it nor stop it, so the situation was what it was:

- No database == No data

- No backups

What we did to resolve the situation

Even when Google was telling us the backups were deleted when the database also was, and all the facts were pointing to this hypothesis, we were still stubborn and we didn’t give up. The GCP web UI gave us no chances to recover, so we decided to continue digging by using the CLI (gcloud). And this was finally the key to our success!

We had the theory backups should be still somewhere even when the docs say they shouldn’t, so we checked different kinds of storage places until checking the SQL backups section. It comes that we were quick enough when checked this section, and we also knew the name of the deleted database, so we were able to run the next command.

$ gcloud sql backups list --instance=deleted-db-name --project our-project ID WINDOW_START_TIME ERROR STATUS 1614876500000 2021-03-04T04:00:00.000+00:00 - SUCCESSFUL 1614765400000 2021-03-03T04:00:00.000+00:00 - SUCCESSFUL 1614654300000 2021-03-02T04:00:00.000+00:00 - SUCCESSFUL 1614543200000 2021-03-01T04:00:00.000+00:00 - SUCCESSFUL 1614432100000 2021-02-28T04:00:00.000+00:00 - SUCCESSFUL 1614321000000 2021-02-27T04:00:00.000+00:00 - SUCCESSFUL 1614210000000 2021-02-26T04:00:00.000+00:00 - SUCCESSFUL

And there they were still! After catching breath and getting our smiles back, the recovering process started. We still were not fully trusting this was going to work since maybe the backup list was there while no longer the data behind, but we had to give it a chance. We moved fast as we knew time was playing against us, so we immediately created a new database from scratch and just after we started the restoring process.

$ gcloud sql backups restore 1614876500000 --restore-instance=new-db-from-scratch-name --project our-project --backup-instance=deleted-db-name All current data on the instance will be lost when the backup is restored. Do you want to continue (Y/n)? Restoring Cloud SQL instance...done. Restored [https://sqladmin.googleapis.com/sql/v1beta4/projects/our-project/instances/new-db-from-scratch-name]

Finally, even when having positive feedback from GCP, we were still not fully believing this had worked. We needed to verify all the data was there, so we did. Fortunately again, everything was recovered. Our next and final step was to perform a SQL-dump in order to ensure we had a recent copy at another location.

Conclusion

Even after deeply searching on Google and not finding any helping results — as all of them say there’s nothing you can do — our knowledge and passion kept us continue digging on the topic until we found a way. We know we were lucky about finding the backups still there, but we know we also were quick, methodical, and obstinate when detecting and looking around to fix it. On the other hand, we have learned we cannot trust database backups performed by the provider, so we’re currently working on procedures to have backups in more places. We have pretty clear this is the first and the last time we are handling this topic.

Moreover, and as opposite to AWS, as we previously mentioned GCP strongly links the database resource to its backups. This has shown up it could be a huge problem when dealing with accidental deletion. Additionally, it’s not possible to copy SQL backups to any other kind of storage. Nevertheless, there are custom solutions consisting of regularly dumping the database and then storing it on a bucket, but it’s not something official.

On the other hand, we strongly advise being careful when having automated processes playing around. As we have seen, the only way to protect a GCP database against termination is to limit the permissions. So the way to proceed is to remove the DELETE permission from the (service) accounts the automated processes use.

Thankfully, you can always count on Geko team —a high-skilled engineering team— who will dig on the topic until getting it is easy and solved for you. Don’t forget to come back to the Geko’s blog and check out what’s new in here!